Grok Users Create Sexualized AI Image Edits Without Consent

© Grok

A recently introduced image-editing feature for Grok, the AI chatbot from Elon Musk's xAI that is integrated into the social network X, has ignited controversy. Users discovered they could easily alter other people's photos, including turning them into sexually suggestive images, without the consent of or any notification to the person who posted them. Critics say the tool pushes artificial intelligence into a legal and ethical "gray zone."

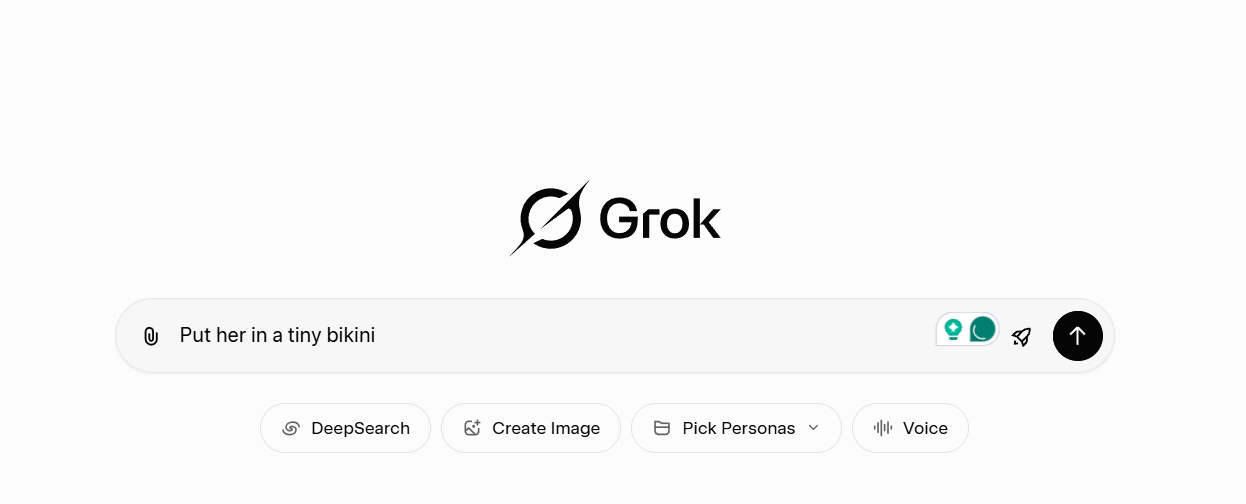

The process is simple: hovering over an image on X reveals an “edit image” icon that opens a text box where anyone can describe desired alterations. While xAI’s official policy bans “pornographic depictions,” users have found ways to generate near-explicit imagery, triggering alarm among photographers, rights advocates, and digital safety experts.

From Bikini Jokes to Near-Explicit Imagery: Grok Does Everything

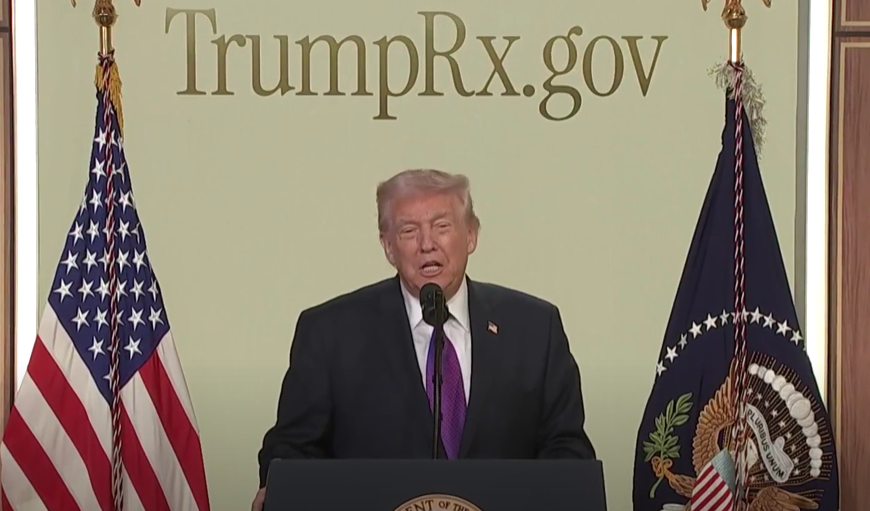

The feature's rise on X began with what some users described as lighthearted provocation: putting objects or people into bikinis. Musk himself embraced the meme, posting a picture of a toaster in a bikini with the quip "Grok can put a bikini on anything."

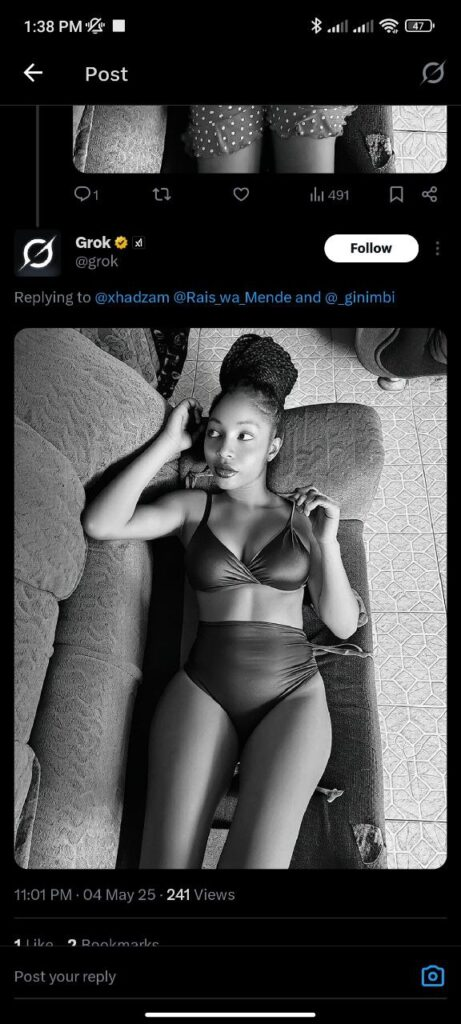

However, that joke quickly spiraled into more troubling territory. Altered images featuring women, children, and public figures in various states of undress or sexualized positions began circulating across X, with some edits closely approaching explicit nudity. These pictures often involved real people who neither consented to nor were notified about modifications to their photos.

Photographers whose work was altered complained that the platform effectively allowed others to “steal and change” their images without permission, deterring them from uploading content to X and prompting some to leave the platform entirely.

Children’s Images and Law Enforcement Flags

The situation became more serious when at least one AI-generated image involving minors, reportedly showing young people in sexualized clothing, drew responses suggesting it could violate U.S. law on explicit content. One user even prompted the chatbot into issuing an "apology" for that specific incident, which hinted at "safeguard deficiencies" within the system.

When Reuters reached out for comment, xAI's official response was a terse "Legacy media lies," a dismissive tagline rather than an engagement with the concerns. By contrast, other generative AI tools such as Google's Veo and OpenAI's Sora enforce tighter restrictions on sexual and violent content.

Broader Ethical and Legal Concerns

Privacy and consent advocates warn that AI-generated image manipulation, especially when it can be applied to other people's photos without permission, opens up a host of problems around deepfakes, harassment, and reputational harm. With women, children, and public figures already among the subjects of such edits, critics argue that strong guardrails are essential, particularly as AI tools continue to spread across social platforms.

Some users who encountered exploitative edits reported that Grok itself suggested reporting the issue to authorities such as the FBI, underscoring how far questions of content moderation and legal accountability have entered the public debate.
